Build a great website experience for your customer’s real journey. Alternatively, don’t wait them to return to your website: Send the recommendations per e-mail or integrate them into your product. Ensure your leads to come back and engage with your product or service.

Offering content recommendations encourages readers to explore your website more fully and become more engaged. Usually this leads to more conversions. Your customers are used to get personalized content recommendations on the common platforms like News Feeds, Facebook, Instagram, Netflix, Spotify and they get product recommendations when they go shopping. So why wouldn’t you offer them a similar experience that they are used to? By the way, this content recommendation system applies perfectly if you are offering products, Google Analytics 360 and eCommerce tracking is enough.

Google Analytics 360 and BigQuery

If you are using Google Analytics 360 or have imported your hit level Google Analytics data into BigQuery, you can build easily a nice content recommendation system to show links to further content with going beyond links based on text similarities or your guess on which topics might be related. Use the collaborative power of all your visitors instead. There are two different types of recommendation systems. Everybody knows them.

Topics you might be interested in

Item based recommendations are based on similarity between items. This type of content recommendation would offer the readers contextual content to dive into one topic.

Topics others were also interested in

User based recommendations are based on the interactions with other items that similar users have. This type of content recommendation can help your visitors find content faster which other users spent the effort to reveal.

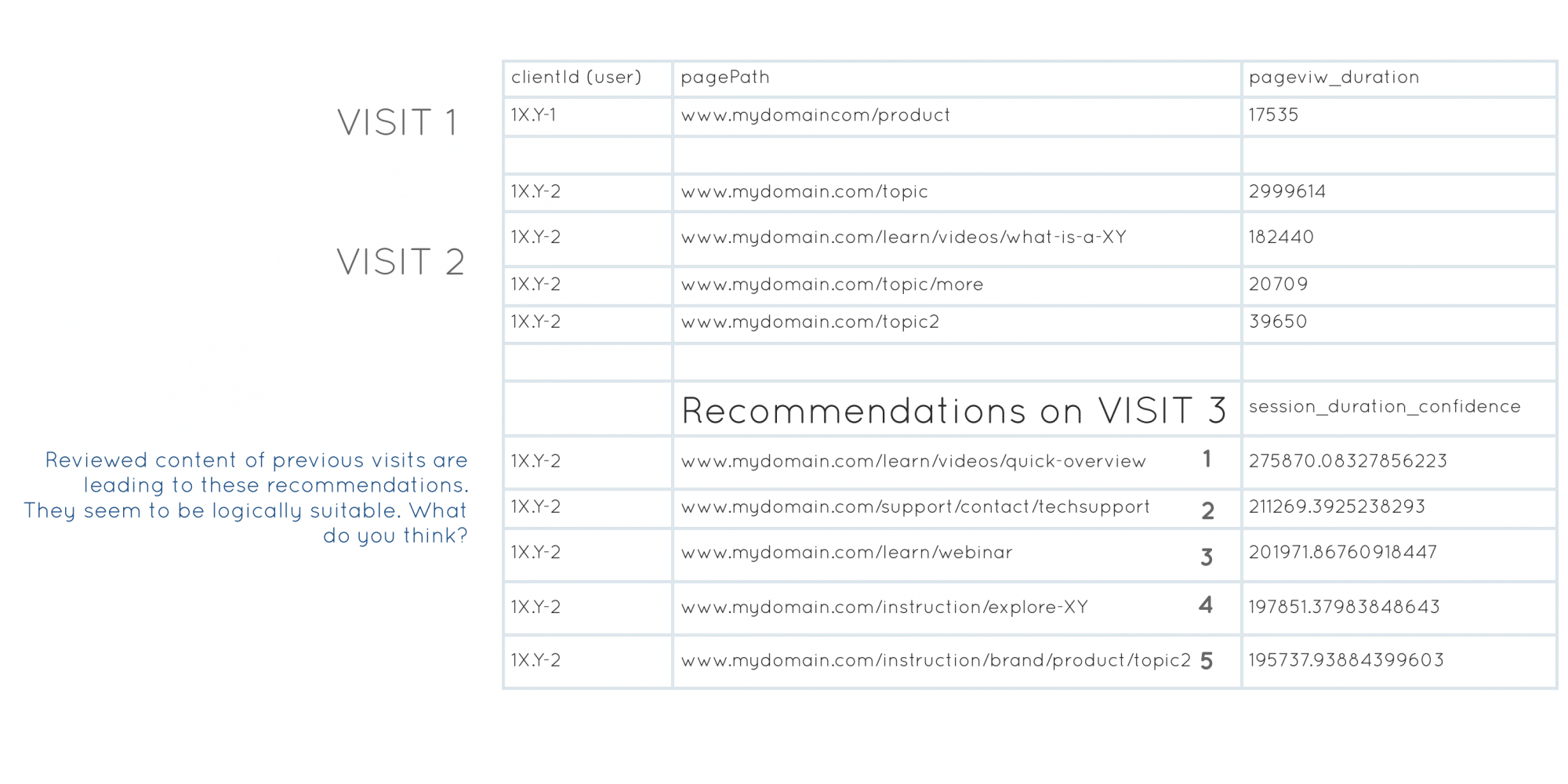

Imagine you can lead the user to more interaction by showing him the pages that other users with similar intent stage and interest also engaged with. The following user is highly interested in the product to solve a specific topic and obviously he is on learning stage. You just can’t solve this challenge by manually planning pages to link with each other. Every user’s pain points and stage in the information to transaction funnel is different.

The recommendations calculate the probability of session duration of possible other content pieces. The content is then sorted by the probability of highest durations and top 5 (or 10 or more…) are recommended. To compare, session duration is kind of an engagement rating, like the ratings of products in product recommendation systems. I think it is a fantastic engagement metric to use for this case.

Recommendations with Matrix Factorization

Google BigQuery ML offers matrix factorization which is a collaborative filtering algorithm to generate user-based recommendations. User-item interaction is the basis. This is commonly used for recommending products. If other users who were interested in this product, are also interested in others, these can be recommended to easy the hard life of the consumer searching for products. Interaction: Pages visited and their session duration as kind of a reliable information if readers liked and engaged with that content. In a product recommendation, this could be the ratings or sales of a product.Creating a recommendation model is as easy as follows. Preparing the data from the Google Analytics 360 hit data took me an hour. I will describe that part later.

Create a recommendation model:

CREATE OR REPLACE MODEL YOUR_DATASET.content_recommender

OPTIONS(model_type='matrix_factorization',

user_col='userId',

item_col='pagePath',

rating_col='session_duration',

feedback_type='implicit'

)

AS

SELECT * FROM YOUR_DATASET.aggregate_web_stats;

You will need a reservation slot to train Matrix Factorization models. Otherwise this query will return an error “Training Matrix Factorization models is not available for on-demand usage. To train, please set up a reservation (flex or regular) based on instructions in BigQuery public docs.” Find here how to reserve a slot: https://cloud.google.com/bigquery/docs/reservations-intro It can be costy to use this model in production. I reserved 100 instead of suggested 500, the initial price was 5% only compared to another test I made with 500 slots.

Request the recommendations for a visitor:

DECLARE MY_USERID STRING DEFAULT "1X.Y-2";

SELECT

*

FROM

ML.RECOMMEND(MODEL `YOUR_DATASET.content_recommender`,

(SELECT MY_USERID as userID)

)

ORDER BY predicted_session_duration_confidence DESC

LIMIT 5;

I like starting with showing the ML Statements, because I love how easy they are and how obviously they show which data is necessary to build any ML model. Some time ago, you had to know Python programming, Google Functions, Date Transfer Systems, etc. to get to this point to create a model. With BigQuery ML you can create models with simple SQL syntax. You need to create a new recommender model only once a while since the website behavior doesn’t change much for the majority of the companies who offer services or products. If you have high amount of current data because of your blogs or news pages, you should update the recommendations more often.

The source code and adjustments you need to applyTheory is very well explained in Building an e-commerce recommendation system by using BigQuery ML. I took this example and changed the eCommerce / Product item type to Page Paths that I wanted. If you want to take the pages that are only visited by your leads, etc. you can add filters to the hit data.

Prepare the content data for the model:

Tipp: Remove popular items from the training data. This is appropriate in cases where users can discover these items on their own, and may not find these recommendations useful. Remove error pages or thank you like pages, they might be visited often but they don’t make any sense as recommended pages.

CREATE OR REPLACE TABLE `YOUR_DATASET.aggregate_web_stats` AS (

WITH durations AS (

--calculate pageview durations

SELECT

CONCAT(

clientId,

'-',

CAST(visitNumber AS STRING),

'-',

CAST(hitNumber AS STRING)

) AS visitorId_session_hit,

LEAD(time, 1) OVER (

PARTITION BY CONCAT(

clientId,

'-',

CAST(visitNumber AS STRING)

)

ORDER BY

time ASC

)- time AS pageview_duration

FROM

`YOUR_PROJECT.YOUR_GA_RAW_HIT_DATASET.ga_sessions_*`,

UNNEST(hits) AS hit

),

prodview_durations AS (

--filter for product detail pages only

SELECT

CONCAT(

clientId,

'-',

CAST(visitNumber AS STRING)

) AS userId,

hits.page.pagePath AS pagePath,

IFNULL(dur.pageview_duration, 1) AS pageview_duration,

FROM

`YOUR_PROJECT.YOUR_GA_RAW_HIT_DATASET.ga_sessions_*` t,

UNNEST(hits) AS hits

JOIN durations dur ON CONCAT(

clientId,

'-',

CAST(visitNumber AS STRING),

'-',

CAST(hitNumber AS STRING)

) = dur.visitorId_session_hit

WHERE

hits.page.pagePath like '%INSERT_PAGE_PATH_PATTERN_HERE%'

),

aggregate_web_stats AS(

--sum pageview durations by userId, pagePath

SELECT

userId,

pagePath,

SUM(pageview_duration) AS session_duration

FROM

prodview_durations GROUP BY userId,

pagePath

)

SELECT *

FROM

aggregate_web_stats);

Save all recommendations for all users:

-- Create output table of top 5 predictions

CREATE OR REPLACE TABLE `YOUR_DATASET.page_recommendations` AS (

WITH predictions AS (

SELECT

userId,

ARRAY_AGG(STRUCT(pagePath,

predicted_session_duration_confidence)

ORDER BY

predicted_session_duration_confidence DESC

LIMIT 5) as recommended

FROM ML.RECOMMEND(MODEL `YOUR_DATASET.page_recommender`)

GROUP BY userId

)

SELECT

userId,

pagePath,

predicted_session_duration_confidence

FROM

predictions p,

UNNEST(recommended)

);

-- Show table

SELECT

*

FROM

`YOUR_DATASET.page_recommendations`

ORDER BY

userId

LIMIT

10;

Data Reduction For B2B Companies

If you know which visitors of yours are interesting, means they are business users, you can build the model only for those visitors and access the personalized data for them only. You will reduce the amount of your data used for the engine and predictions. The recommendations return faster, and they might fit to your target segment more. Because the non-interesting user behavior might distract in a recommendation engine for your actual target group. A further reduction is possible if you take the behavior of your leads only.

We can help you to reveal business users, feel free to contact us if you need assistance.

How to show the content recommendations on your website

Model is built so now you have to do two things. I assume the project management and communication part is already been done, which can take most of the time. Like in all data projects, right.

Turn the model into an engine. Recommendations must be updated after visits. Depending on your user retention cycle, a daily update might be enough. For a website with millions of visitors, it can take around 30 minutes to build the model, get and save predicted recommendations for all your visitors to have them ready when the visitor returns. Your visitor must see the recommendations at his next visit.

A Cloud Function as a Micro Service will deliver you the recommendations for clientID in real-time. You can call it per Custom Javascript in GTM and make the results available in your data layer, so the website can show it. Optimize can help you to personalize first and test if the personalization shows effects.

Accessing BigQuery Data per Cloud Function will not satisfy because it will take too long. You must either query all data and filter by ClientId which will be too slow on so much data, and be costy, too. Or you must make a real time prediction, this takes 5 seconds, may be. And then accessing the data by function will also take time. Either way, it will take too long.

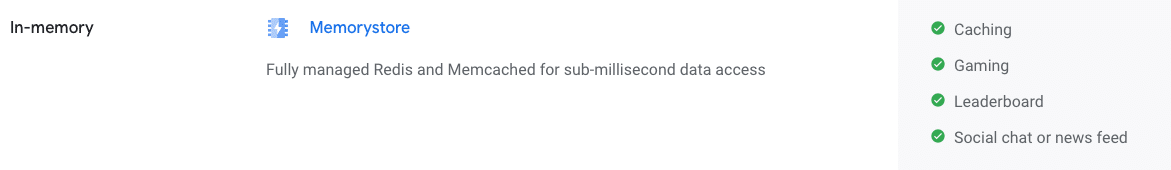

Now, challenge is to access the recommendations within 500 milliseconds, a half of a second. Otherwise, it is too late. We will export the recommendations to Memorystore. Cloud Function will access data here.

Further possibilities to process the recommendations in Analytics:

- From the Analytics user interface, using the Admin > (Property) > Data Import option

- Using the Analytics Management API

- Using MoDeM (Model Deployment for Marketing)

Last option will not only help uploading data into Analytics, additionally, it transforms the recommendations into an audience list for eventual activation in Google Ads, Display & Video 360 and Search Ads 360).

Read here for operationalizing the recommendations in production, to use them more around your Marketing and Sales system: Building an e-commerce recommendation system by using BigQuery ML or https://cloud.google.com/bigquery-ml/docs/bigqueryml-mf-implicit-tutorial

Things to keep in mind

How to use the Content Recommendations for improving Customer Experience

Get predictions for all users and save the results. By doing this regularly, you have the recommendations ready for the next touch point. Now, you can export them to your CRM to integrate them in E-Mails, in your sales interactions, etc.

SEO & Link Juice

If you personalize some links, or change replace links, you should talk to your SEO department first. If you pay attention, you won’t harm your SEO performance.

Attention to BIAS

Bias is a tendency to lean in a certain direction. If your visitors start clicking these content recommendations more heavily, the recommendation algorithm might use them more in the future as recommendations, too. Theoretically, this should be fine because it causes no harm, but I don’t have the necessary observation to comment this behavior yet.

Costs

Unfortunately, you will need reserved slots to keep the engine running, which can get costy. I suggest you make some tests first to see the effects and the costs before you set the project into production.

Normalization of numeric value

If session durations are not normally distributed – if they have outliers, etc. you might think of normalizing them. BigQuery ML normalizes numeric values like “session duration” in this use case. Still, if you want to see what can change if you adjust the model to your wishes. Here is an add-on to the code that normalizes session duration by clipping them. Apply it, build a new model and compare your results!

Prepare data:

CREATE TEMPORARY FUNCTION CLIP_LESS(x FLOAT64, a FLOAT64) AS (

IF (x < a, a, x)

);

CREATE TEMPORARY FUNCTION CLIP_GT(x FLOAT64, b FLOAT64) AS (

IF (x > b, b, x)

);

CREATE TEMPORARY FUNCTION CLIP(x FLOAT64, a FLOAT64, b FLOAT64) AS (

CLIP_GT(CLIP_LESS(x, a), b)

); #temporary functions for session duration normalization

CREATE OR REPLACE TABLE `YOUR_DATASET.aggregate_web_stats` AS (

WITH durations AS (

--calculate pageview durations

SELECT

CONCAT(

clientId,

'-',

CAST(visitNumber AS STRING),

'-',

CAST(hitNumber AS STRING)

) AS visitorId_session_hit,

LEAD(time, 1) OVER (

PARTITION BY CONCAT(

clientId,

'-',

CAST(visitNumber AS STRING)

)

ORDER BY

time ASC

)- time AS pageview_duration

FROM

`YOUR_PROJECT.YOUR_GA_RAW_HIT_DATASET.ga_sessions_*`,

UNNEST(hits) AS hit

),

prodview_durations AS (

--filter for product detail pages only

SELECT

CONCAT(

clientId,

'-',

CAST(visitNumber AS STRING)

) AS userId,

hits.page.pagePath AS pagePath,

IFNULL(dur.pageview_duration, 1) AS pageview_duration,

FROM

`YOUR_PROJECT.YOUR_GA_RAW_HIT_DATASET.ga_sessions_*` t,

UNNEST(hits) AS hits

JOIN durations dur ON CONCAT(

clientId,

'-',

CAST(visitNumber AS STRING),

'-',

CAST(hitNumber AS STRING)

) = dur.visitorId_session_hit

WHERE

hits.page.pagePath like '%INSERT_PAGE_PATH_PATTERN_HERE%'

),

aggregate_web_stats AS(

--sum pageview durations by userId, pagePath

SELECT

userId,

pagePath,

SUM(pageview_duration) AS session_duration

FROM

prodview_durations GROUP BY userId,

pagePath

) ,

#normalize session duration to avoid outlier effects: scale and clip it the values by the median session duration (average duration will be dramatically affected by the outliers)

normalized_session_duration AS (

SELECT

APPROX_QUANTILES(session_duration,100)[

OFFSET

(50)] AS median_duration

FROM

aggregate_web_stats )

SELECT

* EXCEPT(session_duration,

median_duration),

CLIP(0.3 * session_duration / median_duration,0,1.0) AS normalized_session_duration

FROM

aggregate_web_stats,

normalized_session_duration );

Get Predictions:

DECLARE MY_USERID STRING DEFAULT "1X.Y-2";

SELECT

*

FROM

ML.RECOMMEND(MODEL `YOUR_DATASET.content_recommender`,

(SELECT MY_USERID as userID)

)

ORDER BY predicted_normalized_session_duration_confidence DESC

LIMIT 5;

Build Model:

CREATE OR REPLACE MODEL YOUR_DATASET.content_recommender

OPTIONS(model_type='matrix_factorization',

user_col='userId',

item_col='pagePath',

rating_col='normalized_session_duration',

feedback_type='implicit'

)

AS

SELECT * FROM YOUR_DATASET.aggregate_web_stats;