Google Scholar is arguably the most popular search engine for scholarly literature in the world. It works much like Google Search, but the results are indexes of the full text or metadata of scholarly literature across an array of publishing formats and disciplines. As more and more people use Google Scholar to look for different types of publications, extending your target segment has become essential to succeeding on Google Scholar.

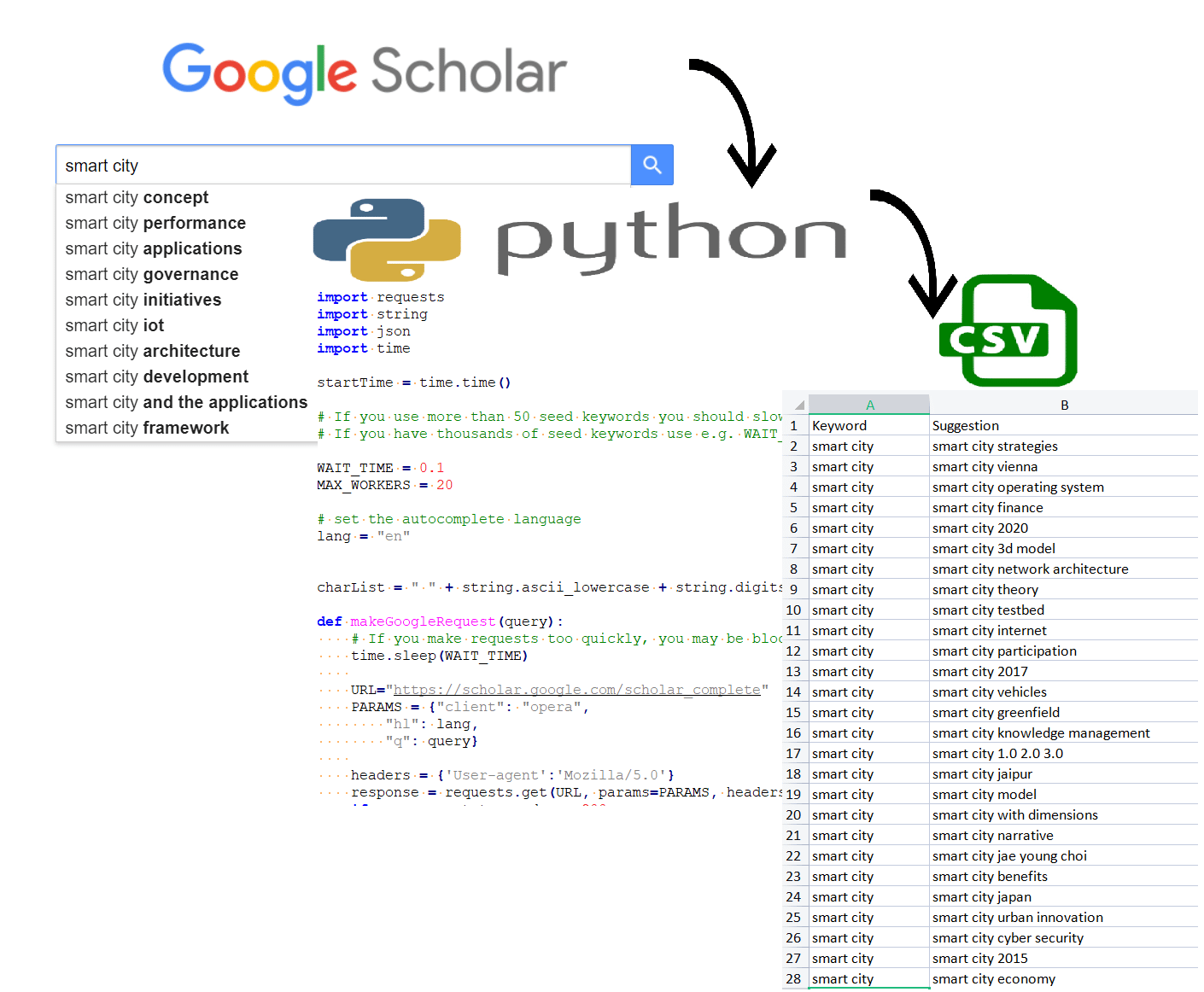

As a Google Scholar contributor, you may wonder how to target more publications. In this post, we’ll show how to scrape Google Scholar Autocomplete so that you can reach a larger target segment for your publications.

How to use the Google Scholar Autocomplete Scraper Script

This script generates autocomplete suggestions from input seed keywords for research and SEO.

- Put your seed keywords into keyword_seeds.csv

- Run the main.py script

- Look at results in keyword_suggestions.csv
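The script reads the first column of keyword_seeds.csv with pandas, which treats the first row as a header by default, so the file should start with a header row. The sketch below shows one way to create such a file. The helper name write_seed_file, the header name "Keyword" and the sample seeds are assumptions made for illustration.

```python
import csv


def write_seed_file(path, keywords, header="Keyword"):
    """Write one keyword per row under a single header row."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        # The script skips this row because pandas reads it as the column name
        writer.writerow([header])
        for keyword in keywords:
            writer.writerow([keyword])


if __name__ == "__main__":
    # Hypothetical seed keywords, used only as an example
    seeds = ["smart city", "machine learning", "climate change"]
    write_seed_file("keyword_seeds.csv", seeds)
```

Any header name works, since the script only takes the values of the first column.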

With the current settings, you’ll fetch the autocomplete results of 50 seed keywords in less than one minute. If you have thousands of seed keywords, you have to slow down the requests:

- WAIT_TIME: The time delay between the autosuggest lookups for each seed keyword. Setting this to 1 or 2 seconds should be fine even for thousands of keywords. Of course, this will increase the run time of your script.

- MAX_WORKERS: We scrape keywords in parallel, which reduces the run time. If you have too many workers, the total number of requests per second might be too high for Google and the script can get blocked. In that case, reduce this value to 5 or 10.
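To get a feel for how these two settings interact, here is a rough back-of-the-envelope sketch. It assumes each worker sends at most one request per WAIT_TIME seconds and ignores network latency, so it gives an upper bound on the request rate and a lower bound on the run time, not a measurement. The helper name estimate_load is hypothetical. The 37 lookups per seed come from the characters the script appends (space, a-z, 0-9).

```python
import string

# One lookup per appended character: space, a-z, 0-9
LOOKUPS_PER_SEED = len(" " + string.ascii_lowercase + string.digits)


def estimate_load(num_seeds, wait_time, max_workers):
    """Return (upper-bound requests per second, lower-bound run time in seconds).

    Assumes wait_time > 0 and that network latency is zero.
    """
    total_requests = num_seeds * LOOKUPS_PER_SEED
    # Each seed keyword is handled by one worker, so idle workers do not count
    active_workers = min(max_workers, num_seeds)
    max_rate = active_workers / wait_time
    min_runtime = total_requests / max_rate
    return max_rate, min_runtime


if __name__ == "__main__":
    for wait_time, max_workers in [(0.1, 20), (1, 10)]:
        rate, runtime = estimate_load(1000, wait_time, max_workers)
        print(f"WAIT_TIME={wait_time}, MAX_WORKERS={max_workers}: "
              f"up to {rate:.0f} requests/sec, at least {runtime:.0f} sec")
```

Under these assumptions, 1,000 seed keywords with WAIT_TIME = 1 and MAX_WORKERS = 10 stay at or below 10 requests per second and need at least about an hour. Real runs take longer because every request also waits for the response.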

The easy-to-use Python script below shows how to use Google Scholar Autosuggest:

```python
# Pemavor.com Google Scholar Autocomplete Scraper
# Author: Stefan Neefischer (stefan.neefischer@gmail.com)

import concurrent.futures
import itertools
import json
import string
import time

import pandas as pd
import requests

startTime = time.time()

# If you use more than 50 seed keywords you should slow down your requests,
# otherwise Google may block the script.
# If you have thousands of seed keywords use e.g. WAIT_TIME = 1 and MAX_WORKERS = 10
WAIT_TIME = 0.1
MAX_WORKERS = 20

# Set the autocomplete language
lang = "en"

# Characters appended to each seed keyword: space, a-z, 0-9
charList = " " + string.ascii_lowercase + string.digits


def makeGoogleRequest(query):
    # If you make requests too quickly, you may be blocked by Google
    time.sleep(WAIT_TIME)
    URL = "https://scholar.google.com/scholar_complete"
    PARAMS = {"client": "opera",
              "hl": lang,
              "q": query}
    headers = {'User-agent': 'Mozilla/5.0'}
    response = requests.get(URL, params=PARAMS, headers=headers)
    if response.status_code == 200:
        try:
            suggestedSearches = json.loads(response.content.decode('utf-8'))["l"]
        except UnicodeDecodeError:
            # Fall back to latin-1 if the body is not valid UTF-8
            suggestedSearches = json.loads(response.content.decode('latin-1'))["l"]
        return suggestedSearches
    else:
        return "ERR"


def getGoogleSuggests(keyword):
    # Build one query per appended character: "<keyword> a", "<keyword> b", ...
    queryList = [keyword + " " + char for char in charList]
    suggestions = []
    for query in queryList:
        suggestion = makeGoogleRequest(query)
        if suggestion != 'ERR':
            suggestions.append(suggestion)

    # Flatten the result lists, drop duplicates, and remove empty suggestions
    suggestions = set(itertools.chain(*suggestions))
    if "" in suggestions:
        suggestions.remove("")
    return suggestions


# Read the CSV file that contains the keywords to send to Google Scholar autocomplete
df = pd.read_csv("keyword_seeds.csv")
# Take values of first column as keywords
keywords = df.iloc[:, 0].tolist()

resultList = []

with concurrent.futures.ThreadPoolExecutor(max_workers=MAX_WORKERS) as executor:
    futuresGoogle = {executor.submit(getGoogleSuggests, keyword): keyword for keyword in keywords}
    for future in concurrent.futures.as_completed(futuresGoogle):
        key = futuresGoogle[future]
        for suggestion in future.result():
            resultList.append([key, suggestion])

# Convert the results to a dataframe
outputDf = pd.DataFrame(resultList, columns=['Keyword', 'Suggestion'])
# Save dataframe as a CSV file
outputDf.to_csv('keyword_suggestions.csv', index=False)
print('keyword_suggestions.csv File Saved')
print(f"Execution time: {(time.time() - startTime):.2f} sec")
```

Google usually returns about 10 autosuggest keywords per query. Here is the trick we use to get more Scholar autocomplete results: for each seed keyword, we append a space followed by a single character (a-z, 0-9) and look up the suggestions for each variant. For example, for “smart city”: smart city a, smart city b, …, smart city z, smart city 0, smart city 1, …, smart city 9. With this strategy, you’ll get about 350 autosuggestions for each keyword instead of 10.
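The expansion step can be tried on its own, without sending any requests. The sketch below mirrors the character list used in the script. The function names expand_seed and merge_suggestions are hypothetical, and the sample suggestion batches are made up to show the deduplication step.

```python
import itertools
import string

# Same characters the script appends: space, a-z, 0-9
CHAR_LIST = " " + string.ascii_lowercase + string.digits


def expand_seed(keyword):
    """Return one query per appended character, e.g. 'smart city a'."""
    return [keyword + " " + char for char in CHAR_LIST]


def merge_suggestions(batches):
    """Flatten per-query suggestion lists into a set without empty strings."""
    merged = set(itertools.chain.from_iterable(batches))
    merged.discard("")
    return merged


if __name__ == "__main__":
    queries = expand_seed("smart city")
    print(len(queries), "queries, for example:", queries[1:4])

    # Made-up suggestion batches: overlapping results collapse into one entry
    sample = [["smart city architecture", "smart city applications"],
              ["smart city applications", ""]]
    print(sorted(merge_suggestions(sample)))
```

Because different queries often return overlapping suggestions, the deduplicated total per seed keyword ends up somewhat below the number of queries times 10.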