Why should you cluster keywords or Search Terms in PPC?

Sample size is crucial for making decisions in PPC. Maybe you want to block some parts of your traffic using negative keywords, or you want to spend more of your budget on the search terms and keywords that bring value to your website. For both tasks, you’ll struggle when you don’t have stable conversion rates to judge performance.

One big goal of keyword clustering is to bring sample sizes up to enable decisions on stable numbers.
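To see why sample size matters, here is a minimal sketch that computes a 95% Wilson score interval for a conversion rate. The function name `wilson_interval` and the click numbers are our own illustration and not part of the clustering code. The point is only that the same observed 2% conversion rate is far less certain at 50 clicks than at 5,000.

```python
import math


def wilson_interval(conversions, clicks, z=1.96):
    """Approximate 95% Wilson score interval for a conversion rate."""
    if clicks == 0:
        # No data at all: the rate could be anything between 0 and 1.
        return (0.0, 1.0)
    p = conversions / clicks
    denom = 1 + z ** 2 / clicks
    center = (p + z ** 2 / (2 * clicks)) / denom
    half = z * math.sqrt(p * (1 - p) / clicks + z ** 2 / (4 * clicks ** 2)) / denom
    return (max(0.0, center - half), min(1.0, center + half))


# Same observed conversion rate (2%), very different certainty.
for clicks in (50, 500, 5000):
    low, high = wilson_interval(round(clicks * 0.02), clicks)
    print(f"{clicks:>5} clicks: {low:.2%} to {high:.2%}")
```

The interval shrinks as clicks grow, which is exactly what clustering tries to achieve by pooling many small queries.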

Why is clustering challenging for big keyword sets?

We have already posted some approaches to clustering keywords (also look at our Free Online Clustering Tool). They rely on algorithms like k-means applied to vector representations of the keywords (TF-IDF).

The above approach works fine for a few thousand keywords, but you’ll run into trouble when you want to process more than 500,000 keywords:

- Processing time gets very long.

- The memory usage on your computer gets too high.

Google changed how it matches queries to keywords (close variants), which causes the number of search terms to explode. Further updates changed which search terms are included in the Search Term Performance Report, which increased the number of queries by 5–7 times.

Hands on: Clustering Google Shopping Queries

What does the initial data set look like?

My input data is about 130 MB in size and contains 2,500,000 search terms. In addition to the Queries, we also added Clicks, Conversions, ConversionValue, Cost and Impression data. Let’s have a look at the distribution of Clicks per Query:

| Rule | # Queries |
| --- | --- |
| Clicks = 1 | 1,650,000 |
| Clicks > 1 | 830,000 |
| Clicks > 10 | 120,000 |
| Clicks > 100 | 12,600 |
| Clicks > 1000 | 1,000 |

More than 50% of all queries got just one click. Less than 1% of the search terms have more than 100 clicks, which in our case is still too low to get stable conversion rates.
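A distribution like this can be computed with a few lines of Python. This is a sketch on a tiny made-up list of click counts, and `click_distribution` is a hypothetical helper, not part of the original script. Note that the “Clicks > n” rows overlap: a query with 150 clicks is counted in “> 1”, “> 10” and “> 100”.

```python
def click_distribution(clicks_per_query, thresholds=(1, 10, 100, 1000)):
    """Count queries with exactly one click and above each threshold."""
    rows = [("Clicks = 1", sum(1 for c in clicks_per_query if c == 1))]
    for t in thresholds:
        rows.append((f"Clicks > {t}", sum(1 for c in clicks_per_query if c > t)))
    return rows


# Made-up click counts, one entry per query.
sample = [1, 1, 1, 2, 5, 12, 150, 1, 3, 1200]

for rule, count in click_distribution(sample):
    print(f"{rule:<14}{count}")
```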

To sum it up: it’s impossible to take action at scale by looking only at full queries. Let’s start grouping keywords together.

Define possible keyword clusters based on rules

Our goal is to assign keywords with low sample sizes to the next best cluster, where we can make a good guess for their expected performance. User searches follow a hierarchy: by adding words, the search gets more specific. For example, “dior” is broader than “dior hypnotic poison”, which in turn is broader than “dior hypnotic poison eau de parfum”.

We’ll use this hierarchy for our clustering logic. Based on the following rules, we want to assign each query to the next best cluster when we go one step up in that hierarchy.

- Rule 1: We create possible clusters only from search queries that are found somewhere in the big keyword list. This means that phrases which never appear on their own aren’t considered valid clusters. E.g., “buy” and “review” are words that are often used within user queries, but they never appear on their own.

- Rule 2: A valid cluster, to which more specific queries can be assigned, should have a minimum number of samples/observations. We used a threshold of 500 clicks in our code example.
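Rules 1 and 2 can be sketched as a simple filter over the query list. The click numbers below are made up for illustration, and `build_roots` is a hypothetical helper name. Like the full script further down, it also limits clusters to queries of at most four words.

```python
MIN_CLICKS = 500   # Rule 2: minimum clicks for a valid cluster
MAX_WORDS = 4      # longest query that may serve as a cluster


def build_roots(query_clicks, min_clicks=MIN_CLICKS, max_words=MAX_WORDS):
    """Keep standalone queries that are short enough and have enough clicks."""
    return {
        query: clicks
        for query, clicks in query_clicks.items()
        if len(query.split()) <= max_words and clicks > min_clicks
    }


# Made-up data: query -> clicks. "buy" never appears on its own,
# so it cannot become a cluster (Rule 1).
queries = {
    "dior": 9000,
    "parfum": 25000,
    "hypnotic poison": 300,
    "dior hypnotic poison": 650,
    "buy dior hypnotic poison eau de parfum": 40,
}

roots = build_roots(queries)
print(sorted(roots))
```

Here “hypnotic poison” is dropped because it has too few clicks, and the long query is dropped because it is both too long and too small.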

It becomes clearer when you look at some examples:

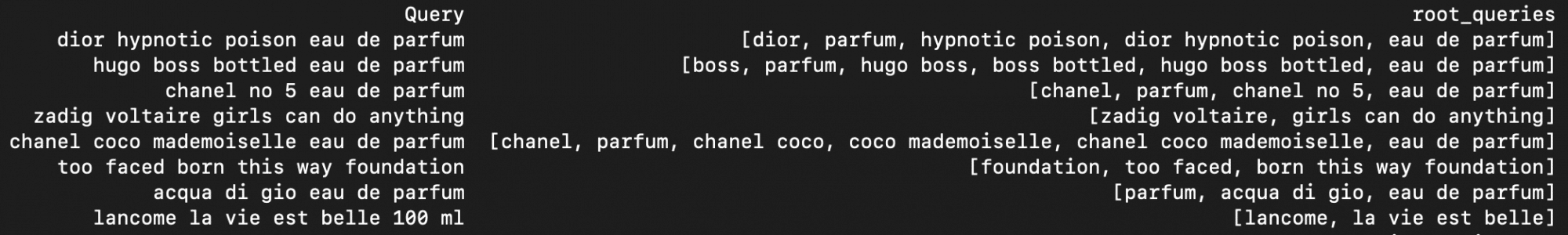

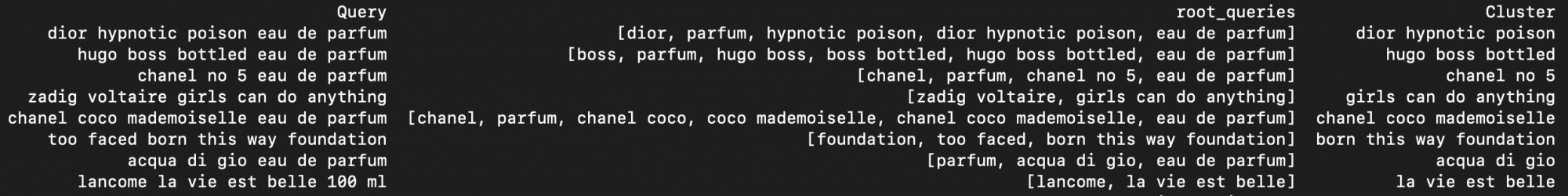

The Query column contains the search term we want to cluster. The “root_queries” column contains the set of queries that would be valid clusters for it.
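One way to find the candidate clusters for a query is to check every contiguous phrase of one to four words against the set of valid clusters. The sketch below uses plain Python; `candidate_roots` and the example set of clusters are assumptions for illustration.

```python
def candidate_roots(query, roots, max_words=4):
    """Return all valid clusters that occur as a contiguous phrase in query."""
    words = query.split()
    found = []
    for n in range(1, max_words + 1):
        for i in range(len(words) - n + 1):
            phrase = " ".join(words[i:i + n])
            if phrase in roots and phrase not in found:
                found.append(phrase)
    return found


# Assumed set of valid clusters (standalone queries with enough clicks).
valid_clusters = {
    "dior", "parfum", "hypnotic poison",
    "dior hypnotic poison", "eau de parfum",
}

options = candidate_roots("dior hypnotic poison eau de parfum", valid_clusters)
print(options)
```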

Selecting the most relevant keyword cluster

You’ll notice that more than one option is usually available. Let’s have a look at the first Query from my screenshot:

“dior hypnotic poison eau de parfum” can be assigned to:

- dior

- parfum

- hypnotic poison

- dior hypnotic poison

- eau de parfum

All of these possible clusters appear as standalone queries in the big keyword list of 2,500,000 keywords, and all of them had more than 500 clicks. If we have to choose just one cluster, which one should we choose?

“parfum” has by far the most clicks of all clusters, but it is probably not a good choice as a cluster. When it comes to relevance, we should start from the other end: the possible clusters with the lowest click numbers are closer to the query we want to cluster. In our example, “dior hypnotic poison” would be the chosen cluster. Let’s add the final rule to the clustering logic:

- Rule 3: If there are multiple cluster options, choose the cluster with the minimum number of Clicks.
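Rule 3 then boils down to taking the minimum over the candidates. In this sketch the click totals are invented, and `select_cluster` is a hypothetical helper that returns `None` when a query has no candidate at all.

```python
def select_cluster(options, cluster_clicks):
    """Rule 3: pick the candidate cluster with the fewest clicks."""
    if not options:
        return None
    return min(options, key=lambda option: cluster_clicks[option])


# Invented click totals per cluster, for illustration only.
cluster_clicks = {
    "dior": 90000,
    "parfum": 250000,
    "hypnotic poison": 4000,
    "dior hypnotic poison": 1200,
    "eau de parfum": 60000,
}

chosen = select_cluster(list(cluster_clicks), cluster_clicks)
print(chosen)
```

With these numbers the most specific cluster wins, while the broad “parfum” cluster is only used when nothing narrower is available.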

After running this logic on the full keyword set, we were able to assign 1,500,000 (out of 2,500,000) queries that have fewer than 100 clicks (too few to judge performance) to the next best cluster. Based on that, we can make a good estimate of the keyword performance.
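Once every query has a cluster, one possible way to estimate performance is to pool clicks and conversions per cluster and use the pooled conversion rate for the low-volume queries inside it. The sketch below assumes rows of (cluster, clicks, conversions) with made-up numbers; `cluster_conversion_rates` is a hypothetical helper, not part of the original script.

```python
from collections import defaultdict


def cluster_conversion_rates(rows):
    """Pool clicks and conversions per cluster and return conversion rates."""
    totals = defaultdict(lambda: [0, 0])  # cluster -> [clicks, conversions]
    for cluster, clicks, conversions in rows:
        totals[cluster][0] += clicks
        totals[cluster][1] += conversions
    return {
        cluster: conv / clicks
        for cluster, (clicks, conv) in totals.items()
        if clicks > 0
    }


# Made-up rows: (assigned cluster, clicks, conversions) per query.
rows = [
    ("dior hypnotic poison", 40, 1),
    ("dior hypnotic poison", 60, 3),
    ("parfum", 200, 2),
]

rates = cluster_conversion_rates(rows)
for cluster, rate in rates.items():
    print(f"{cluster}: {rate:.2%}")
```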

Here is our Python code. You can easily adjust it to your needs:

    # Cluster PPC Keywords
    # Author: Stefan Neefischer

    from collections import defaultdict

    import pandas as pd
    from nltk import ngrams

    # What is a valid cluster? (Rule 2: minimum number of clicks)
    MIN_CLICKS = 500
    # Longest query (in words) that may serve as a cluster
    MAX_ROOT_WORDS = 4

    roots = {}                          # valid clusters: query -> its own clicks
    root_clicksum = defaultdict(int)    # clicks of all queries containing a cluster
    attribute_words = defaultdict(int)  # words not covered by any matched cluster

    # Read queries
    df = pd.read_csv('PLA Queries 2.csv', sep=";")


    def defineRoots(query, kpi):
        # Rules 1 and 2: a standalone query with enough clicks becomes a cluster
        if len(str(query).split(" ")) <= MAX_ROOT_WORDS and kpi > MIN_CLICKS:
            roots[query] = kpi


    def getWordcount(query):
        return len(str(query).split(" "))


    def lookupRoots(query, nr_words, clicks):
        words = str(query).split()
        rootlist = []
        found = set()

        # Check every 1- to 4-word phrase of the query against the valid clusters
        for n in range(1, MAX_ROOT_WORDS + 1):
            for gram in ngrams(words, n):
                key = " ".join(gram)
                if key in roots:
                    rootlist.append(key)
                    root_clicksum[key] += clicks
                    found.update(gram)

        # Count the words that are not part of any matched cluster
        if rootlist:
            for word in words:
                if word not in found:
                    attribute_words[word] += 1

        return rootlist


    def selectCluster(clusteroptions):
        # Rule 3: choose the option with the fewest accumulated clicks
        if not clusteroptions:
            return "undef"
        return min(clusteroptions, key=lambda option: root_clicksum[option])


    # Pass 1: collect valid clusters. Pass 2: find candidates per query.
    # Pass 3: select one cluster per query.
    df.apply(lambda row: defineRoots(row['Query'], row['Clicks']), axis=1)
    df["nr_words"] = df.apply(lambda row: getWordcount(row['Query']), axis=1)
    df["root_queries"] = df.apply(lambda row: lookupRoots(row['Query'], row["nr_words"], row['Clicks']), axis=1)
    df["Cluster"] = df.apply(lambda row: selectCluster(row['root_queries']), axis=1)

    print(df)