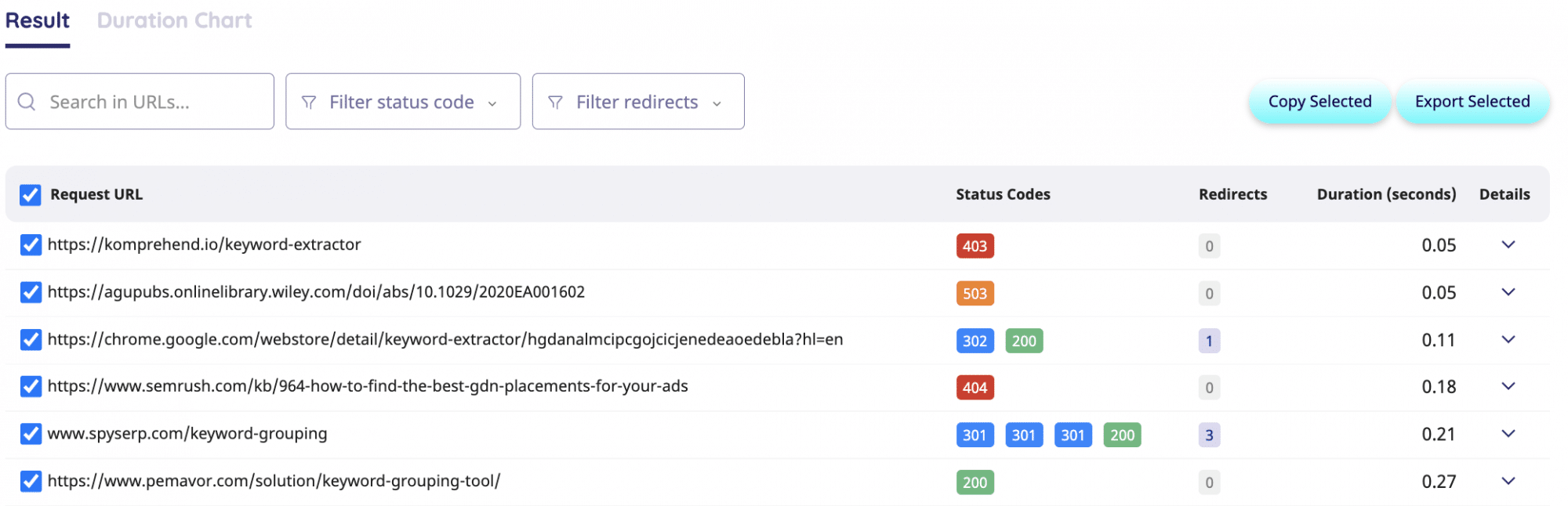

403, 503, 200… 301? And more. Nobody can keep all of them in mind, and checking the status code of every page one by one is a waste of time.

Still, URL status codes are a key aspect of website performance. They provide valuable insight into the availability and functionality of your webpages, which can affect SEO, paid search campaigns, and overall website monitoring.

You can check status codes with free online tools or with a Python script. We have listed the best methods that will work for you.

Free Online Tool: Status Code Checker for 100 URLs

Before you start running your own Python status code checker script, we might have a more convenient solution for you: our free online tool runs bulk status code checks for up to 100 URLs. It can also check the internal links of a single URL: every link on that webpage is extracted and sent to the status code checker.
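The link extraction step can be sketched in a few lines of Python. This is only an illustration built on the standard library, not the code behind the online tool; the names `LinkCollector` and `extract_links` are made up for this example, and the sample HTML is invented.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the absolute http(s) targets of all <a href="..."> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href:
            return
        # Resolve relative links against the URL of the page they were found on
        absolute = urljoin(self.base_url, href)
        # Skip mailto:, tel:, javascript: and similar non-web links
        if urlparse(absolute).scheme in ("http", "https"):
            self.links.append(absolute)


def extract_links(html, base_url):
    """Return all http(s) links found in an HTML string, in document order."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    return collector.links


# Invented sample page, so the sketch runs without any network access
SAMPLE_HTML = (
    '<a href="/about">About</a>'
    '<a href="https://other.example/page">Partner</a>'
    '<a href="mailto:hello@example.com">Mail</a>'
)

for link in extract_links(SAMPLE_HTML, "https://example.com/index.html"):
    print(link)
```

Each returned link could then be passed to a status code check, one URL at a time.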

Check URL Availability for Paid Search Campaigns

Sending paid traffic to URLs that do not work is a waste of money and should be fixed right away. If you want to check the status codes of your Google Ads destination pages, there are Google Ads scripts that check URL status codes. This can work for smaller accounts, as long as you stay within the UrlFetchApp limit of 20,000 URLs per day. Otherwise you have to partition your URL data, and it takes days to complete the status check of your full URL list. In many real-world scenarios this is not an option.

If you have to check your full landing page set within a few hours, e.g. when you launch a new website or shop system, you can use a simple Python script to do the job. On my machine it took 30 seconds to check a list of 100 URLs, which works out to about 12,000 status codes per hour.
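To make the comparison concrete, here is a small back-of-the-envelope calculation. It only uses the two numbers from the text (a daily limit of 20,000 URLs and 30 seconds per 100 URLs); the list size of 100,000 URLs and the function names are assumptions for illustration, and your own throughput will depend on your machine and the servers you check.

```python
import math


def days_under_daily_quota(total_urls, daily_quota=20_000):
    """Days needed when at most `daily_quota` URLs can be checked per day."""
    return math.ceil(total_urls / daily_quota)


def hours_at_measured_rate(total_urls, urls_per_batch=100, seconds_per_batch=30):
    """Hours needed at a measured rate of `urls_per_batch` per `seconds_per_batch`."""
    urls_per_hour = urls_per_batch * 3600 / seconds_per_batch
    return total_urls / urls_per_hour


TOTAL_URLS = 100_000  # hypothetical size of a landing page list

print("Days with a daily quota:", days_under_daily_quota(TOTAL_URLS))
print("Hours with the Python script:", round(hours_at_measured_rate(TOTAL_URLS), 1))
```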

Check Website Availability

If you want to monitor the availability of several different websites, you can use the same code. Instead of specific URLs of one website, list the URLs of the different websites you want to check. You can set up a cron job that runs these status code checks every 5 minutes so that you never miss a problem with your website availability. If you want to check website availability in a professional way, have a look at PRTG Network Monitor: website availability checks are covered by the free version.
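A monitoring run could look roughly like the sketch below. The function name `unavailable_sites`, the script path in the crontab comment, and the canned responses are all assumptions for illustration. The `check` argument stands for any function that returns a status code for a URL and -1 when the request fails; the stand-in used here just looks up invented values so the sketch runs offline.

```python
def unavailable_sites(sites, check):
    """Return (site, status) pairs for sites that failed or answered with 4xx/5xx."""
    problems = []
    for site in sites:
        status = check(site)
        # -1 means the request itself failed (timeout, DNS error, ...)
        if status == -1 or status >= 400:
            problems.append((site, status))
    return problems


# Hypothetical crontab entry that runs a script built around this check every 5 minutes:
# */5 * * * * /usr/bin/python3 /path/to/check_sites.py

# Invented responses standing in for real HTTP requests
CANNED_STATUS = {
    "https://shop.example/": 200,
    "https://blog.example/": 503,
    "https://old.example/": -1,
}


def canned_check(url):
    return CANNED_STATUS.get(url, -1)


for site, status in unavailable_sites(list(CANNED_STATUS), canned_check):
    print(f"{site} looks unavailable (status {status})")
```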

Check URL Status Codes for SEO

If you're an SEO professional, you know the problem of bad status codes. With this Python code you can check all your sitemap URLs in bulk and find every status code that isn't valid. You can run the same approach on all links on your website, internal and external. When something is broken, you should fix it right away. You can automate URL status code checks with cron jobs and Slack notifications. In this solution we showed how to monitor the status codes of all URLs in the sitemap as well as the internal links on the website.
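Getting the URLs out of a sitemap can be sketched as follows, assuming a standard XML sitemap that follows the sitemaps.org protocol. The function name `extract_sitemap_urls` and the sample sitemap are made up for this example; downloading the sitemap file is left out so the sketch runs offline.

```python
import xml.etree.ElementTree as ET

# XML namespace defined by the sitemaps.org protocol
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def extract_sitemap_urls(sitemap_xml):
    """Return the text of every <loc> element in a sitemap XML string."""
    root = ET.fromstring(sitemap_xml)
    # In a sitemap index file the <loc> entries point to further sitemaps,
    # which would have to be fetched and parsed in turn.
    return [loc.text.strip() for loc in root.findall(".//sm:loc", SITEMAP_NS) if loc.text]


# Invented sample sitemap
SAMPLE_SITEMAP = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://example.com/</loc></url>"
    "<url><loc>https://example.com/shop</loc></url>"
    "</urlset>"
)

for url in extract_sitemap_urls(SAMPLE_SITEMAP):
    print(url)
```

The extracted URLs can be written to a CSV file with one URL per line and then checked in bulk.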

Set up the URL Status Code Checker

You can use the Python script to cover the URL checking use cases mentioned above by following these steps:

1) Copy all your URLs to urls.csv, one URL per line. Put the file in the same folder as your Python script.

2) Run the script and wait.

3) Look at the result in urls_withStatusCode.csv. Every URL now has an additional column with its HTTP status code (-1 if the request failed).

# status code checker
import csv
import time

import requests

SLEEP = 0  # Time in seconds the script should wait between requests

url_list = []
url_statuscodes = [["url", "status_code"]]  # file header for the output


def getStatuscode(url):
    try:
        # It is faster to only request the header. HEAD requests do not follow
        # redirects by default, so a 301 is reported as 301.
        # verify=False skips TLS certificate validation (requests may warn about it).
        r = requests.head(url, verify=False, timeout=5)
        return r.status_code
    except requests.RequestException:
        return -1  # the request failed (timeout, DNS error, refused connection, ...)


# URL checks from file input
# Use one URL per line that should be checked
with open('urls.csv', newline='') as f:
    reader = csv.reader(f)
    for row in reader:
        if row:  # skip empty lines
            url_list.append(row[0])

# Loop over full list
for url in url_list:
    print(url)
    check = [url, getStatuscode(url)]
    time.sleep(SLEEP)
    url_statuscodes.append(check)

# Save file
with open("urls_withStatusCode.csv", "w", newline="") as f:
    writer = csv.writer(f)
    writer.writerows(url_statuscodes)

If this approach is still not fast enough, you can also run the status code checks in parallel. This improves the run time considerably. Please ask your IT department how many requests per second are acceptable.
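One possible way to parallelize the checks is a thread pool from the standard library. The sketch below is an assumption about how this could be done, not a tested part of the script: the name `check_all` and the default of 8 workers are chosen for illustration, and `fake_check` with its canned responses only stands in for `getStatuscode` so the example runs offline.

```python
from concurrent.futures import ThreadPoolExecutor


def check_all(urls, check, max_workers=8):
    """Run `check(url)` for every URL in worker threads.

    Returns [url, status_code] rows in the same order as the input.
    """
    urls = list(urls)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields the results in input order, even if requests finish out of order
        codes = pool.map(check, urls)
        return [[url, code] for url, code in zip(urls, codes)]


# Invented responses standing in for real HTTP requests
FAKE_RESPONSES = {
    "https://example.com/": 200,
    "https://example.com/old": 301,
    "https://example.com/missing": 404,
}


def fake_check(url):
    return FAKE_RESPONSES.get(url, -1)


for row in check_all(FAKE_RESPONSES, fake_check, max_workers=4):
    print(row)
```

In the script, `check_all(url_list, getStatuscode)` would take the place of the sequential loop. Keep in mind that `max_workers` only limits how many requests run at the same time; it is not a requests-per-second limit, so start with a small value.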

What do the different HTTP status codes mean?

- Status Code 200: Everything is fine! The URL response code is OK. No action required.

- Status Code 3xx: Redirect response. Normally no urgent action is required.

- Status Code 4xx: Client error, for example 404 Not Found. Action is required when you send PPC traffic to URLs with that status code. Links on your website that return such a status should also be reviewed and fixed.

- Status Code 5xx: Server error. This is something the server admins should have a look at.
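If you want to sort a result file by these groups, a small helper like the following could do it. The function name `classify_status` and the labels are assumptions for this example; the value -1 is what the script in this article writes when a request fails.

```python
def classify_status(code):
    """Map a status code (or -1 for a failed request) to a coarse label."""
    if code == -1:
        return "no response"
    if 200 <= code < 300:
        return "ok"
    if 300 <= code < 400:
        return "redirect"
    if 400 <= code < 500:
        return "client error"
    if 500 <= code < 600:
        return "server error"
    return "other"


for code in (200, 301, 404, 503, -1):
    print(code, classify_status(code))
```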

Do you need a custom solution with Python?

One-size-fits-all solutions can’t quite meet everybody’s unique needs. We know that, and we can provide a custom Python solution for your use case. Contact us.