Google seems to have big plans for Smart Bidding, and it can alter its product in many ways to make it succeed. Close variants could be the ultimate move to push Smart Bidding into the market.

Everything happens for a reason at Google. It has been pushing Smart Bidding heavily, repeating the message again and again for years. Why is it doing this? And why is the adoption rate still so low? One thing is clear: rolling out Smart Bidding is the top goal, and it would be surprising if Google didn’t look for ways to achieve this by changing something in its product.

The second big thing is the changes to exact match keywords. What is the reason for doing this when you already have the modified broad and broad match types? They were built to cover all those close variants, weren’t they? Why is Google now doing this for exact match as well?

Who defines what is significant?

Lately, we have started to think that these two issues are connected. First of all, what does it mean when Google removes the exact match type?

We’re big fans of single keyword ad groups, especially with exact match keywords (at least before close variants went crazy). It’s all about control:

- The traffic is clean by default, so there is no need to add negative keywords.

- Ad copy and landing pages match the keyword perfectly.

- You can bid very aggressively on those keywords because, in the past, they were predictable.

With that in mind, a great setup was to use, for example, modified broad match to catch new queries and add the good ones as new exact match keywords. The bad patterns were added as negative keywords. It was a great process for building a high-quality, continuously growing account.

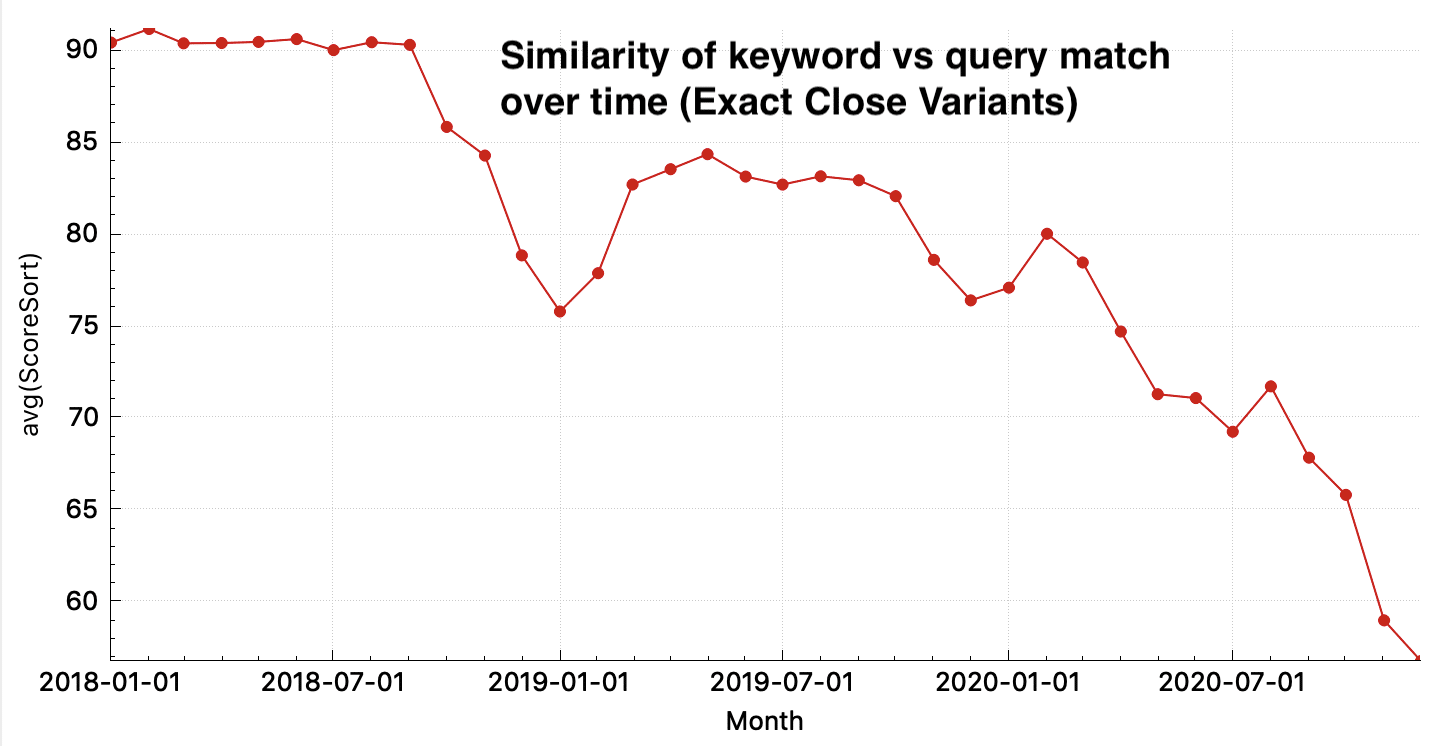

Now the predictability of exact match is gone, because close variants are applied more and more loosely, even to exact match keywords. This is what the development looks like for one large account in the US market between 2018 and 2020. You can find more detail about the similarity calculation here, along with a free online tool for calculating the matching quality of your own data.
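The similarity calculation behind the chart is not reproduced in this article. As a rough stand-in, here is a minimal sketch that scores how close a search query is to the exact match keyword it triggered, using Python's standard `difflib.SequenceMatcher`. The function names and the impression weighting are our own assumptions for illustration, not the method used for the chart.

```python
from difflib import SequenceMatcher


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so formatting differences don't count."""
    return " ".join(text.lower().split())


def matching_similarity(keyword: str, query: str) -> float:
    """Return a 0..1 score: 1.0 means the query equals the keyword after normalization.

    Assumption: a character-based ratio is used as a proxy for matching quality.
    """
    return SequenceMatcher(None, normalize(keyword), normalize(query)).ratio()


def weighted_similarity(rows) -> float:
    """Impression-weighted average similarity.

    rows: iterable of (keyword, query, impressions) tuples, e.g. taken from
    a search term export. Returns 0.0 when there are no impressions.
    """
    weighted_sum = 0.0
    total_impressions = 0
    for keyword, query, impressions in rows:
        weighted_sum += matching_similarity(keyword, query) * impressions
        total_impressions += impressions
    return weighted_sum / total_impressions if total_impressions else 0.0
```

Computing `weighted_similarity` per month over the search terms of exact match keywords would give one trend line for how fuzzy the matching has become over time.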

Google is pushing hard to make matching fuzzier. The recent change of hiding “not significant” queries in the search term performance report makes it even more difficult to track and understand what is happening. We’re sure everything happens for a reason at Google.

Close variants can be a game changer

Google seems to have a problem with the Smart Bidding adoption rate. There are many smart PPC professionals out there who are open to ongoing testing but have never switched to Smart Bidding. They tested it, maybe several times, but keep using manual bidding.

Close variants could now be a game changer for Google’s Smart Bidding: Google has the only system on the market that is able to bid at the query level. It can pay less for fuzzy matches and more for close matches. This is impossible with manual bidding. Every change in matching logic will bring down the performance of manual bidding systems and make Smart Bidding look superior. Manual bidding has lost control.

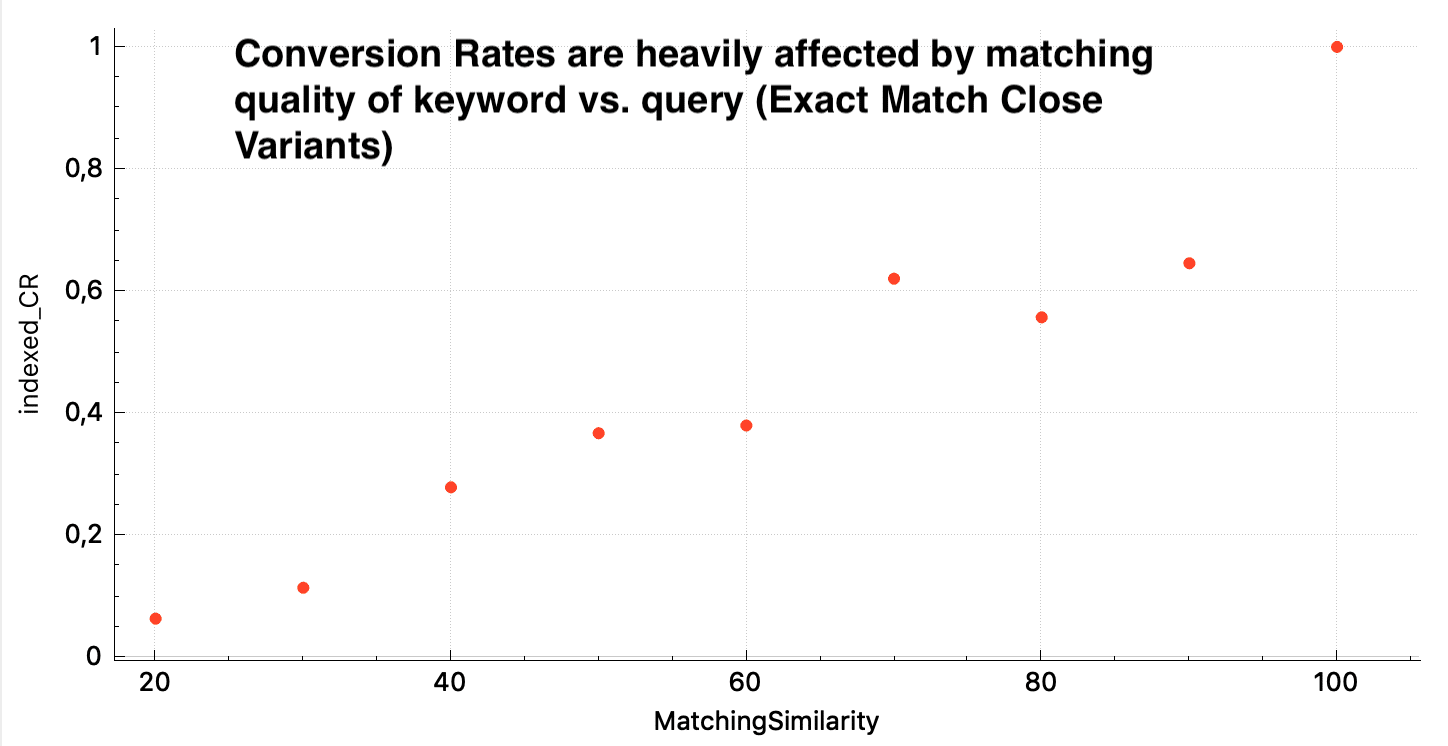

The impact of this is huge when you look at the effect of matching quality on conversion rates. All numbers in the chart are for exact match keywords only.

We aren’t talking about small performance differences that would justify bid adjustments of 10%. We’re talking about lowering bids by 60% or even more based on matching quality. We would add matching similarity to our prediction model for calculating manual bids right away. However, we have no control over it. Only Smart Bidding does.
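To make the size of that adjustment concrete, here is a minimal sketch of what a query-level bid could look like if manual bidding had access to the matching similarity at auction time. Everything in it is hypothetical: the similarity buckets, the relative conversion rates, and the function name `query_level_bid` are made-up illustration values, not numbers from our data and not something Google Ads exposes for manual bidding.

```python
from bisect import bisect_right

# Hypothetical buckets: lower bound of each similarity range ...
SIMILARITY_FLOORS = [0.0, 0.4, 0.6, 0.8, 1.0]
# ... and a made-up conversion rate relative to a perfect match (1.0).
RELATIVE_CVR = [0.3, 0.4, 0.6, 0.85, 1.0]


def query_level_bid(base_bid: float, similarity: float) -> float:
    """Scale the keyword bid by the relative CVR of the query's similarity bucket."""
    if not 0.0 <= similarity <= 1.0:
        raise ValueError("similarity must be between 0 and 1")
    # Find the last bucket whose lower bound is <= similarity.
    bucket = bisect_right(SIMILARITY_FLOORS, similarity) - 1
    return round(base_bid * RELATIVE_CVR[bucket], 2)
```

With these illustration values, a keyword bid of 2.00 stays at 2.00 for a perfect match, while a query with a similarity of 0.5 falls into the 0.4 bucket and is bid at 40% of the keyword bid, which is the 60% reduction mentioned above.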

What can we do about it?

- Add negative keywords to reduce close variant matches: This will be a lot of work, especially as Google makes more and more fuzzy matches. At some point, negative keyword limits will become a problem. One approach could be to use n-grams to block the most frequent patterns within close variant matches. The cut-off point is a matching similarity below X (X depends on your CVR data, as shown in the chart above). The great thing about this: you can also apply it to the “not significant” queries that are still visible in Google Analytics.

- Don’t trust Google regarding Smart Bidding. If we’re right about these points, we’ll face big changes when every auction player runs the same centralized bidding logic. The winner? Who has been pushing Smart Bidding so hard for years? You know, everything happens for a reason.
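The n-gram idea from the first point can be sketched as follows. This is a minimal illustration under our own assumptions: similarity is approximated with `difflib.SequenceMatcher`, the threshold `max_similarity` plays the role of X, and the function name `negative_candidates` and its parameters are hypothetical. It collects the word n-grams that appear in low-similarity queries but not in the keyword itself, then ranks them by how many queries contain them.

```python
from collections import Counter
from difflib import SequenceMatcher


def ngrams(tokens, n):
    """Return all contiguous word n-grams of a token list."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def negative_candidates(pairs, max_similarity=0.7, n_values=(1, 2), min_count=2):
    """Rank n-grams that are frequent in fuzzy matches.

    pairs: iterable of (exact_keyword, search_query) tuples.
    max_similarity: the cut-off X; only queries below it are inspected.
    Returns a list of (ngram, number_of_queries), most frequent first.
    """
    counts = Counter()
    for keyword, query in pairs:
        kw_tokens = keyword.lower().split()
        q_tokens = query.lower().split()
        similarity = SequenceMatcher(
            None, " ".join(kw_tokens), " ".join(q_tokens)
        ).ratio()
        if similarity >= max_similarity:
            continue  # close enough, not a fuzzy match
        keyword_grams = {g for n in n_values for g in ngrams(kw_tokens, n)}
        for n in n_values:
            # dict.fromkeys dedupes while keeping order: count each n-gram once per query
            for gram in dict.fromkeys(ngrams(q_tokens, n)):
                if gram not in keyword_grams:
                    counts[gram] += 1
    return [(g, c) for g, c in counts.most_common() if c >= min_count]
```

Before adding any candidate as a negative keyword, it is worth checking that it does not also appear in well-converting queries. The list is only a starting point for review, not an automatic block list.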

Key takeaways

- With close variants Google has the only system on the market that is able to bid on query level. They can pay less for fuzzy matches and more for close matches.

- You can check the matching quality of your own data with this free online tool.

- To gain better control, one approach can be adding negative keywords and using n-grams for blocking the most frequent patterns within close variant matchings.